Augmented Reality Deep Dive – What can we do with AR?

One of the hottest new technologies in software development is augmented reality (AR). It is not a technology we use in our day-to-day work, so we held a hackathon with the entire team to familiarize ourselves with this new “kid” on the block.

Before we tell you more about the hackathon, we should explain the bigger picture. One of our reasons for looking into augmented reality is that we would like to build an app that recognizes when the camera is looking at specific buildings. Once that happens, the app should show a text or video. The most difficult part of this plan is, of course, detecting that the camera is looking at a specific building. We therefore created three tracks, each of which we thought would bring us closer to a proper final solution.

The three tracks are:

– Location tracking

– Compass

– AR anchors

Location tracking

The first track is about placing augmented reality content (markers) on GPS coordinates. When a user comes near the location and points their phone toward the coordinate, the app should show the marker. If this worked as expected, we could put markers on the GPS coordinates of the buildings we want to recognize.
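To make "near the location" and "toward the coordinate" concrete, here is a minimal Python sketch of the two calculations involved: the distance from the user to a marker and the compass bearing toward it. This is not the code from the hackathon. The function names, the spherical-Earth approximation, and the 100 meter radius are all assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; assumes a spherical Earth


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance in meters between two GPS points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    return math.degrees(math.atan2(y, x)) % 360


def is_near(user, marker, radius_m=100.0):
    """True if the user is within radius_m of the marker.

    user and marker are (latitude, longitude) tuples in degrees;
    the default radius is an arbitrary, hypothetical threshold.
    """
    return distance_m(*user, *marker) <= radius_m
```

The bearing returned here is what the phone's heading would have to be compared against to decide whether the user is pointing at the marker. Both values are only as good as the GPS fix they are computed from.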

After playing with it for several hours we identified the following problems:

– the markers jump all over the scene and their size changes over time

– the markers sometimes disappear and you have to search the room to get them back

– two markers with the same GPS location are not drawn at the same location in AR

So this technology is not ready for prime time (yet). We suspect that many of these problems were caused by testing indoors, where GPS reception is typically weak, so we expect that taking this outside will improve the performance quite a bit.

Compass

GPS data alone is not sufficient: the application also needs to know what the camera is looking at, and for this we use the compass bearing. The second track therefore used the phone's compass to determine where somebody is looking. Initial research showed that the accuracy of the compass leaves a lot to be desired, so this track was about finding out how inaccurate the compass really is.

For the compass track, we tried two approaches: reading compass data directly from the phone, or obtaining it through the ARCore framework. In the end, there is only one big difference between the two approaches: the compass data obtained through the framework is wildly inaccurate when the phone is held level (with the ground).

Apart from that, both approaches share the following observations:

– There is a lot of drift in the direction of the needle (depending on the phone it is between 10 and 20 degrees off)

– High-end phones seem to have better compasses than low-end phones

– Manual calibration of the compass helps a little (but not enough)

– The compass seems to suffer a lot from (magnetic) interference from laptops or other phones nearby

This drift is too large to tell whether the user is looking in the direction of the building. With a drift of 10 degrees, the app could conclude that the building is on the screen when in reality it is not. So again, this is not good enough to use right now. We do think that repeating this exercise outdoors would reduce the drift, mainly because there is less magnetic interference.
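The effect of such a drift can be illustrated with a small Python sketch. It checks whether the bearing toward a building falls inside the camera's horizontal field of view, and how far sideways a heading error shifts things at a given distance. This is an illustration under stated assumptions, not our hackathon code: the function names are hypothetical and the 60 degree field of view is an assumed value that differs per phone.

```python
import math


def angle_diff_deg(a, b):
    """Smallest signed difference a - b in degrees, in the range [-180, 180)."""
    return (a - b + 180) % 360 - 180


def is_on_screen(heading_deg, bearing_to_building_deg, horizontal_fov_deg=60.0):
    """True if the building's bearing lies within the horizontal field of view.

    horizontal_fov_deg is an assumed value; real cameras vary.
    """
    offset = angle_diff_deg(bearing_to_building_deg, heading_deg)
    return abs(offset) <= horizontal_fov_deg / 2


def lateral_offset_m(distance_m, heading_error_deg):
    """Sideways displacement at distance_m caused by a heading error."""
    return distance_m * math.tan(math.radians(heading_error_deg))


# The phone really points north (0 degrees); the building is at bearing 35.
true_heading = 0.0
bearing_to_building = 35.0

really_visible = is_on_screen(true_heading, bearing_to_building)
# The compass reads 10 degrees too high, so the app thinks we face 10 degrees.
app_thinks_visible = is_on_screen(true_heading + 10.0, bearing_to_building)
```

With these numbers `really_visible` is `False` while `app_thinks_visible` is `True`, which is exactly the failure described above. For a sense of scale, `lateral_offset_m(100, 10)` comes out at roughly 17.6 meters, so at 100 meters a 10 degree error can easily be wider than the building itself.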

AR anchors

The last track was about using ARCore anchors to see if the building is on your screen. An anchor is something you place in the augmented world; with cloud anchors, it should then be visible on other phones as well.

At the start of the Augmented Reality hackathon, we played with a quick demo for cloud anchors and came to the conclusion that it was worth investigating as it seemed to solve our problem. During the hackathon, we started to dig deeper into the technology and started experimenting.

The conclusions were quite disappointing. We had mistakenly identified ARCore anchors as a magic bullet that would solve our problems and we quickly ran into some interesting behaviour:

– The anchors seemed to disappear over longer distances (more than 10 meters).

– While anchors could be shared with other devices, setting them up appeared to be entirely manual rather than programmatic.

– The anchors lived only for a short period of time (24h).

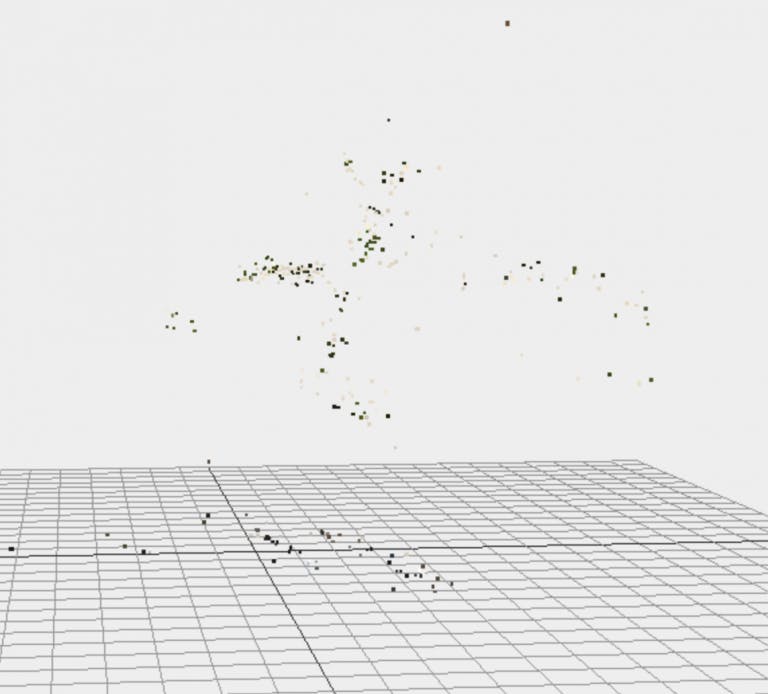

The reason for this is that cloud anchors are created by taking screen input and building a 3d profile based on that screen input from multiple angles. This profile then is stored in the Google cloud and compared to the data currently on the screen constantly. If there is a match then the anchor is displayed. If we could visually display these profiles they would look something like this:

One challenge with this is that if you increase the distance and make the object or scene you are looking at smaller, you will have fewer reference points and the match will become harder to make.

Cloud anchors are volatile by nature, with a lifetime of only 24 hours, so they were not designed for creating permanent profiles. Furthermore, not being able to set them up programmatically is an issue. So AR anchors will not solve our problem either.

Conclusion

Our preliminary conclusion is that none of the tested techniques seems good enough to achieve our goal of recognizing that the camera is looking at a specific building. We will investigate the following follow-up items:

– look into existing AR frameworks (Mapbox, Wikitude & Blippar)

– look into image recognition

– look into existing apps (that do something similar)

– test GPS & compass outdoors

Thanks for reading and stay tuned for the next update in our AR adventure!

Robin & Bouke